Acquire Data for Classification

Big Ideas¶

Cache your data to speed up your data acquisition.

Helper functions are your friends.

Objectives¶

By the end of the acquire lesson and exercises, you will be able to...

- read data into a pandas DataFrame using the following modules:

pydataset

from pydataset import data

df = data('dataset_name')

seaborn datasets

import seaborn as sns

df = sns.load_dataset('dataset_name')

read data into a pandas DataFrame from the following sources:

an Excel spreadsheet

a Google sheet

Codeup's mySQL database

pd.read_excel('file_name.xlsx', sheet_name='sheet_name')

pd.read_csv('filename.csv')

pd.read_sql(sql_query, connection_url)

- use pandas methods and attributes to do some initial summarization and exploration of your data.

.head()

.shape

.info()

.columns

.dtypes

.describe()

.value_counts()

create functions that acquire data from Codeup's database, save the data locally to CSV files (cache your data), and check for CSV files upon subsequent use.

create a new python module,

acquire.py, that holds your functions that acquire the titanic and iris data and can be imported and called in other notebooks and scripts.

import pandas as pd

import numpy as np

from pydataset import data

import os

import matplotlib.pyplot as plt

import seaborn as sns

# ignore warnings

import warnings

warnings.filterwarnings("ignore")

from env import host, user, password

data('iris', show_doc=True)

df_iris = data('iris')

Print the first 3 rows.¶

df_iris.head(3)

Print the number of rows and columns (shape).¶

df_iris.shape

Print the column names.¶

df_iris.columns

# Return a nice list of columns if I want to grab and use them later.

df_iris.columns.to_list()

Print the data type of each column.¶

# Return just data types.

df_iris.dtypes

# Return data types plus.

df_iris.info()

Print the summary statistics for each of the numeric variables.¶

- Would you recommend rescaling the data based on these statistics?

All of the numeric variables in the iris dataset are in the same unit of measure, cm, so I don't see a need to scale them.

IF there were a very large range in our feature values, even though they were measured in the same units, it might be beneficial to scale our data since a number of machine learning algorithms use a distance metric to weight feature importance.

stats = df_iris.describe().T

stats

stats['range'] = stats['max'] - stats['min']

stats[['mean', '50%', 'std', 'range']]

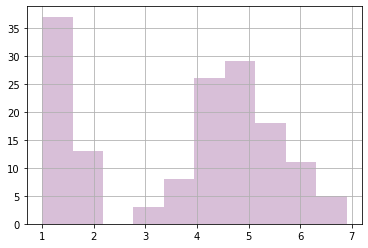

# I can do a quick visual of the variable distributions if I want.

df_iris['Petal.Length'].hist(color='thistle')

plt.show()

Read Table1_CustDetails the excel module dataset, Excel_Exercises.xlsx, into a dataframe, df_excel.¶

df_excel = pd.read_excel('Spreadsheets_Exercises.xlsx', sheet_name='Table1_CustDetails')

df_excel.info()

I'm going to take care of these categorical variables with numeric data types right now. This will make the rest easier.

df_excel = df_excel.astype({"is_senior_citizen": "object", "phone_service": "object",

"internet_service": "object", "contract_type": "object"})

df_excel.info()

Assign the first 100 rows to a new dataframe, df_excel_sample.¶

df_excel_sample = df_excel.head(100)

df_excel_sample.shape

Print the number of rows of your original dataframe.¶

df_excel.shape[0]

Print the first 5 column names.¶

df_excel.columns[:5]

Print the column names that have a data type of object.¶

df_excel.select_dtypes(include='object').head()

df_excel.select_dtypes(include='object').columns

Compute the range for each of the numeric variables.¶

- I can choose to look just at the numeric variables quickly like this. If I was going forward with this DataFrame, I would change the data types of numeric categoricals to object data types. I'll wait and do this below with the DataFrames I will use going forward.

telco_stats = df_excel.describe().T

telco_stats

telco_stats['range'] = telco_stats['max'] - telco_stats['min']

telco_stats

Read the data from a Google sheet into a dataframe, df_google.¶

# Grab the Google sheet url.

sheet_url = 'https://docs.google.com/spreadsheets/d/1Uhtml8KY19LILuZsrDtlsHHDC9wuDGUSe8LTEwvdI5g/edit#gid=341089357'

# Turn Google sheet address into a CSV export URL.

csv_export_url = sheet_url.replace('/edit#gid=', '/export?format=csv&gid=')

# Read in the data using the pandas `pd.read_csv()` function.

df_google = pd.read_csv(csv_export_url)

Print the first 3 rows.¶

df_google.head(3)

Print the number of rows and columns.¶

df_google.shape

Print the column names.¶

df_google.columns.to_list()

Print the data type of each column.¶

- Again, there are numeric data types here that are really categorical variables, so I'll take care of them right here.

df_google.dtypes

df_google.info()

df_google = df_google.astype({'PassengerId': 'object', 'Survived': 'object', 'Pclass': 'object'})

df_google.info()

Print the summary statistics for each of the numeric variables.¶

- Some of these numeric columns are really categorical values, and I can make that change here if I want. I could also just note this observation and take care of this when I prepare my data.

df_google.describe().T

Print the unique values for each of your categorical variables.¶

- Some of these categorical variable columns have a ton of unique values, so I'll check the number first. If I want to see the unique values, I can do a

.value_counts()on individual columns.

for col in df_google:

if df_google[col].dtypes == 'object':

print(f'{col} has {df_google[col].nunique()} unique values.')

df_google.Survived.value_counts(dropna=False)

df_google.Pclass.value_counts(dropna=False)

df_google.Sex.value_counts(dropna=False)

df_google.Embarked.value_counts(dropna=False)

acquire.py Functions¶

- Make a function named get_titanic_data that returns the titanic data from the codeup data science database as a pandas data frame. Obtain your data from the Codeup Data Science Database.

def get_connection(db, user=user, host=host, password=password):

return f'mysql+pymysql://{user}:{password}@{host}/{db}'

def new_titanic_data():

sql_query = 'SELECT * FROM passengers'

df = pd.read_sql(sql_query, get_connection('titanic_db'))

df.to_csv('titanic_df.csv')

return df

def get_titanic_data(cached=False):

'''

This function reads in titanic data from Codeup database if cached == False

or if cached == True reads in titanic df from a csv file, returns df

'''

if cached or os.path.isfile('titanic_df.csv') == False:

df = new_titanic_data()

else:

df = pd.read_csv('titanic_df.csv', index_col=0)

return df

titanic_df = get_titanic_data(cached=False)

titanic_df.head()

- Make a function named

get_iris_datathat returns the data from the iris_db on the codeup data science database as a pandas DataFrame. The returned DataFrame should include the actual name of the species in addition to the species_ids. Obtain your data from the Codeup Data Science Database.

def new_iris_data():

'''

This function reads the iris data from the Codeup db into a df,

writes it to a csv file, and returns the df.

'''

sql_query = """

SELECT species_id,

species_name,

sepal_length,

sepal_width,

petal_length,

petal_width

FROM measurements

JOIN species

USING(species_id)

"""

df = pd.read_sql(sql_query, get_connection('iris_db'))

df.to_csv('iris_df.csv')

return df

def get_iris_data(cached=False):

'''

This function reads in iris data from Codeup database if cached == False

or if cached == True reads in iris df from a csv file, returns df

'''

if cached or os.path.isfile('iris_df.csv') == False:

df = new_iris_data()

else:

df = pd.read_csv('iris_df.csv', index_col=0)

return df

iris_df = get_iris_data(cached=False)

iris_df.head()

I'm going to test these in a new notebook using my darden_class_acquire.py module. Once I know they read my data into pandas DataFrames, I'm ready to clean and prep my data.